
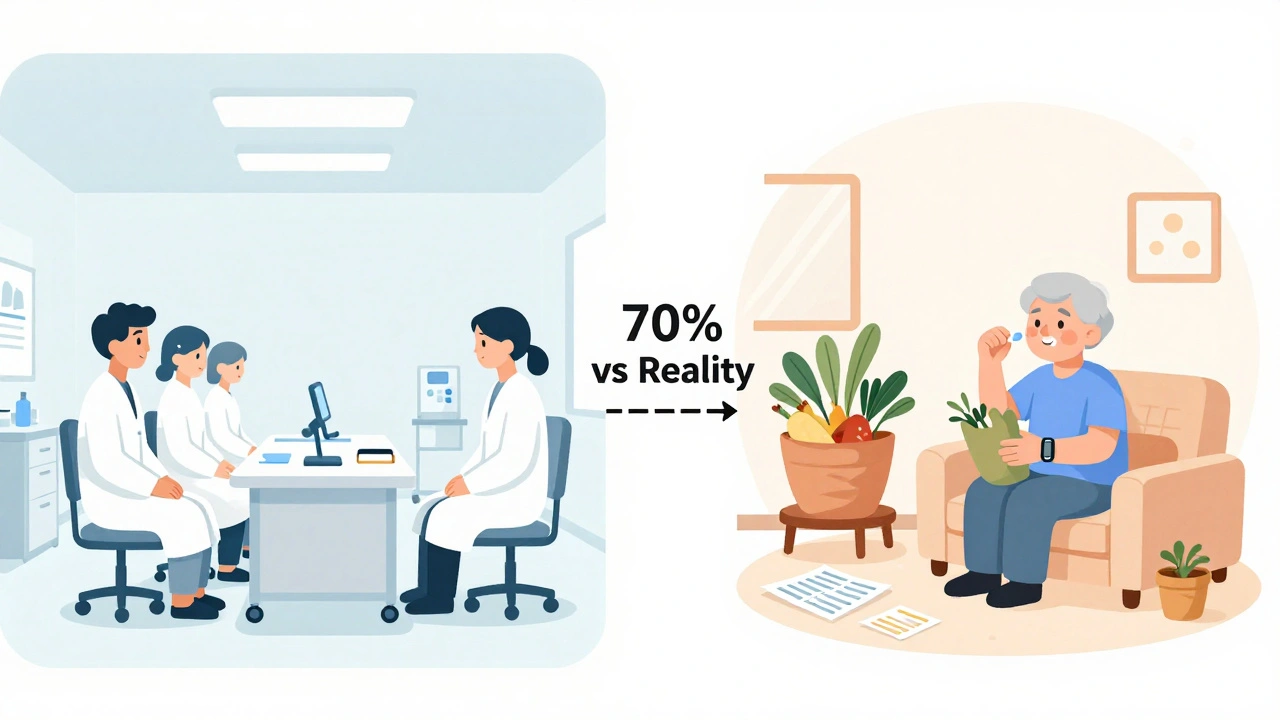

When a new drug hits the market, you hear about its 70% success rate in clinical trials. But does that mean it will work the same way for your neighbor, your parent, or someone with multiple chronic conditions? The truth is, clinical trial data and real-world outcomes tell very different stories - and understanding the gap between them can change how you think about treatment.

Why Clinical Trials Don’t Reflect Real Life

Clinical trials are designed like controlled experiments. Patients are carefully selected: no other major illnesses, strict age limits, no medications that might interfere, and often, they need to live near a major hospital. In fact, studies show that up to 80% of people who could benefit from a new treatment are excluded from these trials. That means the people in the trial are not representative of the people who will actually use the drug.

Take diabetes and kidney disease. A 2024 study compared 5,734 patients in clinical trials with over 23,000 patients in real medical records. The trial group was much healthier: fewer had heart disease or obesity, and far fewer were over 70. Their data was collected every three months, on schedule, with almost no missing records. In real life? Data comes in spurts - when someone visits the doctor, when a lab result comes in, when a wearable tracks their heart rate. Only 68% of the real-world data was complete. That’s not negligence - it’s reality.

What Real-World Outcomes Actually Show

Real-world outcomes come from places like electronic health records, insurance claims, patient registries, and even smartwatches that track activity or sleep. These sources capture what happens when a drug is used by real people - with real lives. Someone might miss a dose because they’re working two jobs. Another might skip follow-ups because they can’t afford the bus fare. These aren’t flaws in the data - they’re the data.

A 2023 study in the New England Journal of Medicine found that only 20% of cancer patients in academic centers would qualify for a typical clinical trial. Black patients were 30% more likely to be excluded - not because their cancer was worse, but because of barriers like transportation, distrust in the system, or lack of access to specialized care. That doesn’t mean the drug doesn’t work. It means we don’t know how well it works for them.

Real-world data also reveals side effects that never showed up in trials. A drug might cause fatigue in 2% of trial participants - but in real life, with older patients on five other medications, that number jumps to 18%. These aren’t surprises. They’re warnings we missed because the trial didn’t include the people most likely to experience them.

The Trade-Off: Precision vs. Practicality

Clinical trials are great at answering one question: Does this treatment work under perfect conditions? They use randomization, blinding, and strict protocols to minimize bias. That’s why regulators like the FDA still require them before approving a new drug.

But real-world data answers a different question: Does it work in the messy, complicated world where people live? That’s where it shines. Real-world evidence helped prove that certain heart medications reduced hospital stays for elderly patients with multiple conditions - something trials couldn’t test because those patients were excluded.

The problem? Real-world data is messy. It’s incomplete. It’s biased. Someone who uses a fitness tracker might be more health-conscious than the average patient. Someone who visits a clinic regularly might be better off than someone who only shows up in an emergency. That’s why researchers use tools like propensity score matching - statistical techniques that pair treated and untreated patients with similar recorded characteristics, so the groups being compared are more alike. But even then, you can’t control for everything. If a hidden factor - like stress levels or diet quality - affects both treatment use and outcome, you might draw the wrong conclusion.

Regulators Are Catching Up

The FDA didn’t always accept real-world data. But since the 21st Century Cures Act in 2016, they’ve started using it - especially for post-market safety monitoring. Between 2019 and 2022, they approved 17 drugs partly based on real-world evidence. That’s up from just one in 2015. The European Medicines Agency is even further ahead - 42% of their post-approval safety studies now use real-world data, compared to 28% at the FDA.

Payers are pushing too. UnitedHealthcare and Cigna now require proof of cost-effectiveness before covering expensive new drugs. Real-world data helps them answer: Does this drug actually reduce hospital visits or emergency care? If it doesn’t, they won’t pay for it - no matter what the trial said.

But there’s a catch. A 2023 study in Nature Communications found that 63% of attempts to combine clinical trial data with real-world data fail because the two types of data are built differently. You can’t just paste one into the other. You need careful alignment - matching variables, adjusting for timing, accounting for how data was collected. Without that, you get garbage in, garbage out.

Cost, Speed, and the Future

Running a Phase III clinical trial costs an average of $19 million and takes two to three years. Real-world studies? They can be done in six to twelve months for 60-75% less money. That’s why companies like Flatiron Health spent $175 million and five years building a database of 2.5 million cancer patients - so they could answer questions trials never could.

The global real-world evidence market is expected to grow from $1.84 billion in 2022 to $5.93 billion by 2028. Oncology leads the way - because cancer trials are expensive and slow, and placebo controls are often unethical. Rare diseases are next - because there often aren’t enough patients for a traditional trial.

The future isn’t about replacing clinical trials with real-world data. It’s about using both. Hybrid trials now exist - where patients start in a controlled trial but continue being tracked in real life after approval. AI is helping too. Google Health’s 2023 study showed AI could predict treatment outcomes from electronic records with 82% accuracy - better than traditional trial analysis.

What This Means for You

If you’re a patient, don’t assume a drug that worked for 70% of trial participants will work the same for you. Ask your doctor: Who was in the trial? Were they like me? Did they have other health problems? Were they older? Did they take other meds?

If you’re a caregiver, understand that side effects you see at home might not have been reported in the trial. That doesn’t mean the drug is dangerous - it means the trial didn’t capture your reality.

And if you’re following health news, be skeptical of headlines that say, “New drug cures 80% of patients!” That’s the trial number. The real-world number? It’s probably lower. And that’s okay - because real-world evidence isn’t about perfection. It’s about truth.

Why don’t clinical trials include older or sicker patients?

Clinical trials exclude older or sicker patients to reduce variables that could cloud the results. If someone has kidney disease, heart failure, and diabetes, it’s hard to tell if a new drug helped or if one of their other conditions caused a change. But that means the results don’t reflect how most people actually use the drug. This isn’t about safety - it’s about control. The trade-off is that we get clean data, but it’s not always relevant.
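To make this trade-off concrete, here is a minimal Python sketch of how stacked exclusion criteria shrink the eligible pool. The `Patient` record, the cut-offs (`MAX_AGE`, `EXCLUDED_CONDITIONS`, `MAX_OTHER_MEDICATIONS`), and the five example patients are all hypothetical, chosen only for illustration; real trial protocols define their own criteria.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    age: int
    conditions: set[str]
    medications: int  # number of other regular medications


# Hypothetical exclusion rules, loosely modeled on the kinds of criteria
# described above: an age cap, no major comorbidities, few other drugs.
MAX_AGE = 70
EXCLUDED_CONDITIONS = {"heart failure", "kidney disease"}
MAX_OTHER_MEDICATIONS = 2


def is_eligible(p: Patient) -> bool:
    """Return True only if the patient passes every hypothetical rule."""
    if p.age > MAX_AGE:
        return False
    if p.conditions & EXCLUDED_CONDITIONS:  # any excluded condition present
        return False
    if p.medications > MAX_OTHER_MEDICATIONS:
        return False
    return True


def eligible_fraction(patients: list[Patient]) -> float:
    """Share of a population that would qualify under the rules above."""
    if not patients:
        return 0.0
    return sum(is_eligible(p) for p in patients) / len(patients)


# Made-up example population; not real patient data.
population = [
    Patient(54, {"diabetes"}, 1),
    Patient(76, {"diabetes", "heart failure"}, 5),
    Patient(68, {"diabetes", "kidney disease"}, 3),
    Patient(49, set(), 0),
    Patient(81, {"diabetes"}, 4),
]

print(f"{eligible_fraction(population):.0%} of this population would qualify")
```

With these made-up patients, only two of five pass every rule, so the script prints `40% of this population would qualify`. Each added rule makes the trial data cleaner and the trial population less like the people who will later use the drug.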

Can real-world data replace clinical trials?

No - not yet. Clinical trials are still the gold standard for proving a drug works under ideal conditions. Real-world data can’t control for bias the same way randomization and blinding can. Regulators like the FDA still require trial data before approval. But real-world data is now used to confirm long-term safety, monitor side effects, and show whether a drug works in everyday practice. They’re partners, not rivals.

Are real-world studies less reliable than clinical trials?

Not necessarily - but they’re different. Clinical trials are more reliable for proving cause and effect. Real-world studies are more reliable for showing what actually happens in daily life. The problem comes when people treat real-world data like it’s a clinical trial. Without proper statistical methods to correct for bias, real-world studies can give misleading results. That’s why experts warn that enthusiasm for real-world evidence has outpaced the methods to use it correctly.
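One such method is the propensity score matching mentioned earlier. The Python sketch below covers only the matching step and assumes each patient's propensity score (the estimated probability of receiving the treatment given their recorded characteristics) has already been computed, for example with a logistic regression model. The function names, the greedy 1:1 nearest-neighbor rule, the 0.05 caliper, and the data are hypothetical choices for illustration; real analyses pick among several matching variants and check covariate balance afterward.

```python
def match_on_propensity(treated, controls, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    treated, controls: lists of (propensity_score, outcome) tuples.
    Returns (treated, control) pairs whose scores differ by at most
    `caliper`; treated patients with no close control stay unmatched.
    """
    available = list(controls)
    pairs = []
    for t in sorted(treated):  # process treated patients in score order
        if not available:
            break
        best = min(available, key=lambda c: abs(c[0] - t[0]))
        if abs(best[0] - t[0]) <= caliper:
            pairs.append((t, best))
            available.remove(best)  # each control is used at most once
    return pairs


def matched_difference(pairs):
    """Average outcome difference (treated minus control) across pairs."""
    if not pairs:
        raise ValueError("no matched pairs")
    return sum(t[1] - c[1] for t, c in pairs) / len(pairs)


# Made-up data: (propensity score, outcome), where outcome 1 = improved.
treated = [(0.80, 1), (0.62, 1), (0.35, 0), (0.90, 1)]
controls = [(0.78, 1), (0.60, 0), (0.33, 0), (0.20, 0), (0.10, 1)]

pairs = match_on_propensity(treated, controls)
print(len(pairs), "matched pairs")
print("difference among matched pairs:", round(matched_difference(pairs), 3))
```

Here the treated patient with a score of 0.90 has no control within the caliper, so it is dropped and the estimate (about 0.333) comes from three pairs. Matching can only balance characteristics that were recorded; a hidden factor that drives both treatment and outcome stays unbalanced, which is the limitation described earlier.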

Why is real-world data so expensive to use if it’s cheaper to collect?

Collecting the data is cheap - pulling it from electronic records or insurance claims doesn’t cost much. But making it usable is expensive. Health systems use dozens of different software platforms that don’t talk to each other. Data is messy, incomplete, and inconsistently labeled. Cleaning it, linking it across sources, and applying advanced statistical models requires specialized teams - and most healthcare organizations don’t have them. That’s why only 35% of organizations have dedicated real-world evidence teams.
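A small piece of that cleaning work can be sketched in Python: mapping inconsistent lab labels to one name, aligning irregular visits to a fixed schedule, and measuring how complete the result is. Everything here is an assumption for illustration: the `LABEL_MAP` synonyms, the 90-day `WINDOW_DAYS` (chosen to mirror a trial's three-month visits), the rule of keeping the last reading in each window, and the sample records. Production pipelines usually map labels to standard code systems such as LOINC and may use different alignment rules.

```python
from typing import Optional

# Hypothetical synonyms that different record systems might use for one lab test.
LABEL_MAP = {"hba1c": "hba1c", "a1c": "hba1c", "hemoglobin a1c": "hba1c"}

WINDOW_DAYS = 90  # assumed quarterly schedule, mirroring three-month trial visits


def normalize_label(raw: str) -> Optional[str]:
    """Map a raw label to its canonical name, or None if it is unknown."""
    return LABEL_MAP.get(raw.strip().lower())


def align_to_windows(records, test, n_windows):
    """Keep the last reading of `test` in each fixed window.

    records: iterable of (day, raw_label, value), with day counted from the
    start of follow-up. Returns a list of length n_windows holding the
    aligned value, or None where a window has no reading.
    """
    aligned = [None] * n_windows
    last_day = [-1] * n_windows
    for day, raw_label, value in records:
        if normalize_label(raw_label) != test:
            continue  # different test, or a label we cannot map
        w = day // WINDOW_DAYS
        if 0 <= w < n_windows and day >= last_day[w]:
            aligned[w] = value  # a later reading in the same window wins
            last_day[w] = day
    return aligned


def completeness(aligned):
    """Fraction of expected windows that contain a reading."""
    if not aligned:
        return 0.0
    return sum(v is not None for v in aligned) / len(aligned)


# Made-up records over roughly 18 months; not real patient data.
records = [
    (12, "HbA1c", 8.1),
    (75, "A1C ", 7.9),            # same window as day 12, recorded later
    (200, "Hemoglobin A1c", 7.4),
    (210, "LDL", 130),            # different test, ignored
    (400, "hba1c", 7.0),
]

aligned = align_to_windows(records, "hba1c", n_windows=6)
print(aligned)
print(f"{completeness(aligned):.0%} complete")
```

With the sample records, three of six quarterly windows contain a reading, so the script prints `[7.9, None, 7.4, None, 7.0, None]` and `50% complete`. A proportion of this kind is one way to express completeness figures like the 68% reported for the real-world diabetes data.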

How do drug companies use real-world data today?

Drug companies use real-world data to design better trials - selecting patients more likely to respond or stick with treatment. They use it to prove cost-effectiveness to insurers. And they use it to monitor safety after approval. For example, if a drug causes rare liver damage that only shows up after six months, clinical trials won’t catch it. Real-world data can. Companies like Roche and Pfizer now invest heavily in real-world databases because they help them prove value beyond the trial.

Kylee Gregory

December 5, 2025 AT 07:26